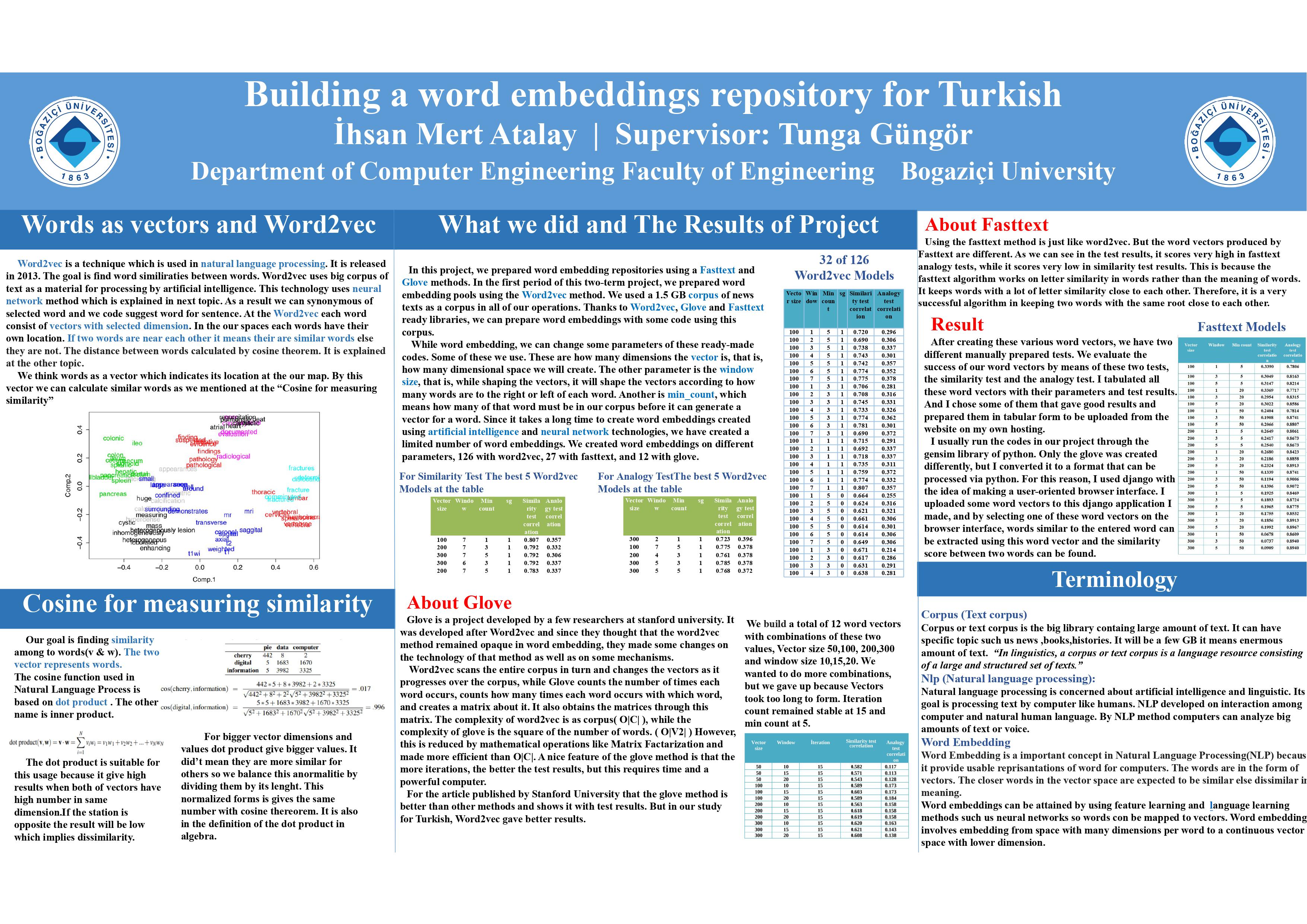

Building a word embeddings repository for Turkish

In this project, we aim at building a comprehensive word embedding repository for the Turkish language.

In this project, we aim at building a comprehensive word embedding repository for the Turkish language. Using each of the state-of-the-art word embedding methods, embeddings of all the words in the language will be formed using a corpus. First, the three commonly-used embedding methods (Word2Vec, Glove, Fasttext) will be used and an embedding dictionary for each one will be formed. Then we will continue with context-dependent embedding methods such as BERT and Elmo. Each method will be applied with varying parameters such as different corpora and different embedding dimensions. In this way, at the end of the project we will obtain an embedding repository for Turkish which will be quite useful for deep learning-based natural language processing applications.

This is a 2-semesters project.