SERVE: See - Listen - Plan - Act

The SERVE (See – Listen – Plan – Act) project enables human-robot interaction through speech recognition and object detection.

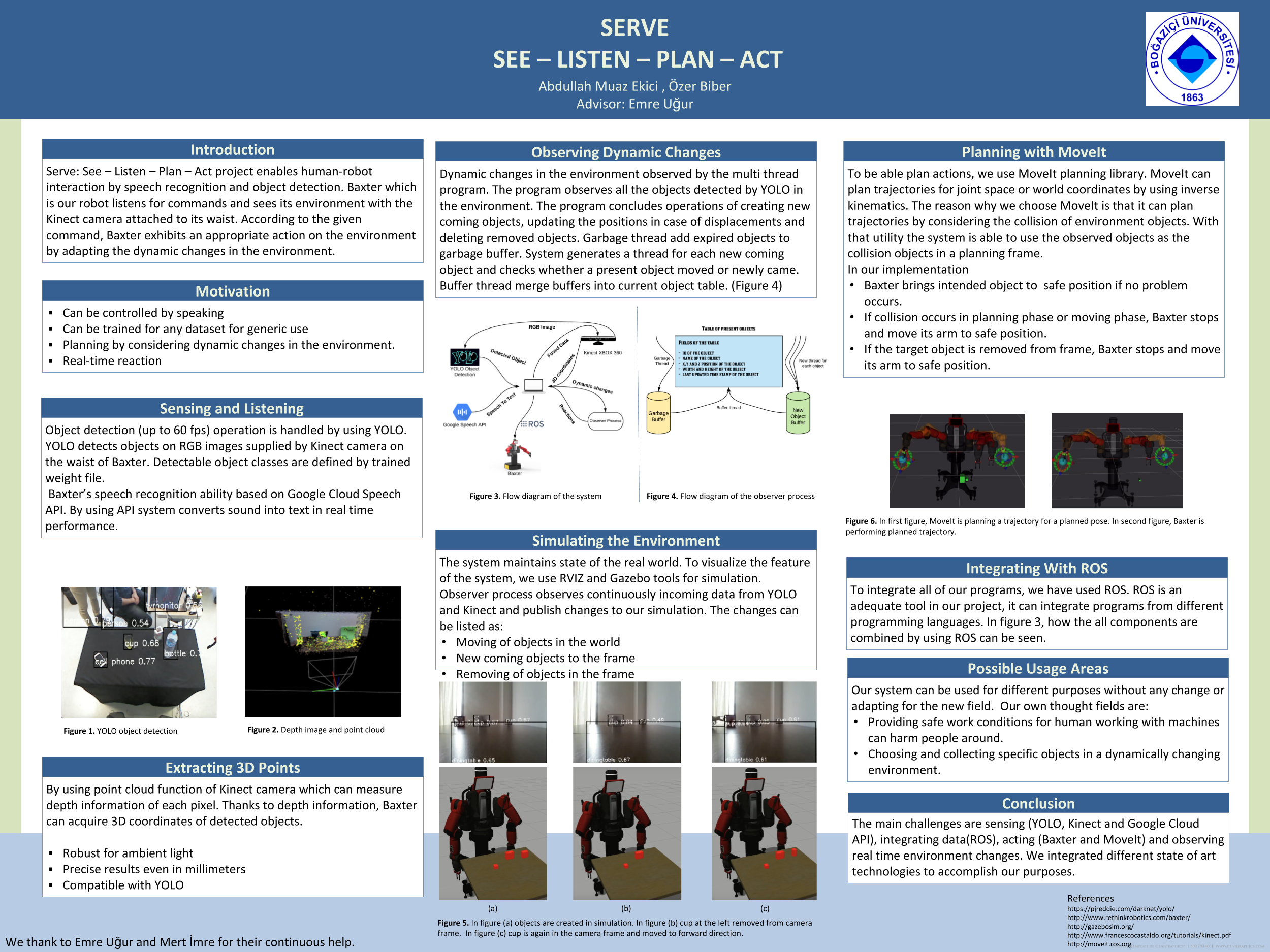

Baxter, our robot, listens for spoken commands and sees its environment through a Kinect camera attached to its waist. Given a command, Baxter performs the appropriate action on the environment and adapts to dynamic changes in it.
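
The See – Listen – Plan – Act cycle described above could be sketched roughly as follows. This is a minimal illustration and not the project's actual code. The names `Command`, `parse_command`, and `serve_step` are hypothetical, as are the `detect` and `execute` callbacks, which stand in for the Kinect object detector and Baxter's motion interface. Speech is assumed to have already been transcribed to text. Re-detecting the scene before each attempt is one simple way to adapt to objects that move.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple

Position = Tuple[float, float, float]


@dataclass
class Command:
    action: str  # e.g. "pick" (hypothetical vocabulary)
    target: str  # e.g. "cup"


def parse_command(
    text: str, known_actions: List[str], known_objects: List[str]
) -> Optional[Command]:
    """Listen: map transcribed speech to a command by naive keyword matching."""
    words = text.lower().split()
    action = next((a for a in known_actions if a in words), None)
    target = next((o for o in known_objects if o in words), None)
    if action is None or target is None:
        return None
    return Command(action, target)


def serve_step(
    text: str,
    detect: Callable[[], Dict[str, Position]],
    execute: Callable[[str, Position], bool],
    known_actions: List[str],
    max_attempts: int = 3,
) -> bool:
    """Run one See - Listen - Plan - Act cycle, retrying if the action fails."""
    for _ in range(max_attempts):
        scene = detect()  # See: object name -> current position
        command = parse_command(text, known_actions, list(scene))  # Listen
        if command is None:
            return False  # unknown action, or target not visible
        goal = scene[command.target]  # Plan: aim at the latest position
        if execute(command.action, goal):  # Act
            return True
        # The action failed (for example, the object moved), so look again.
    return False
```

In this sketch `execute` returns `False` when the action does not succeed, which makes the loop observe the scene again and retry with the updated object position.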