Learning Sequences Using Recurrent Neural Networks

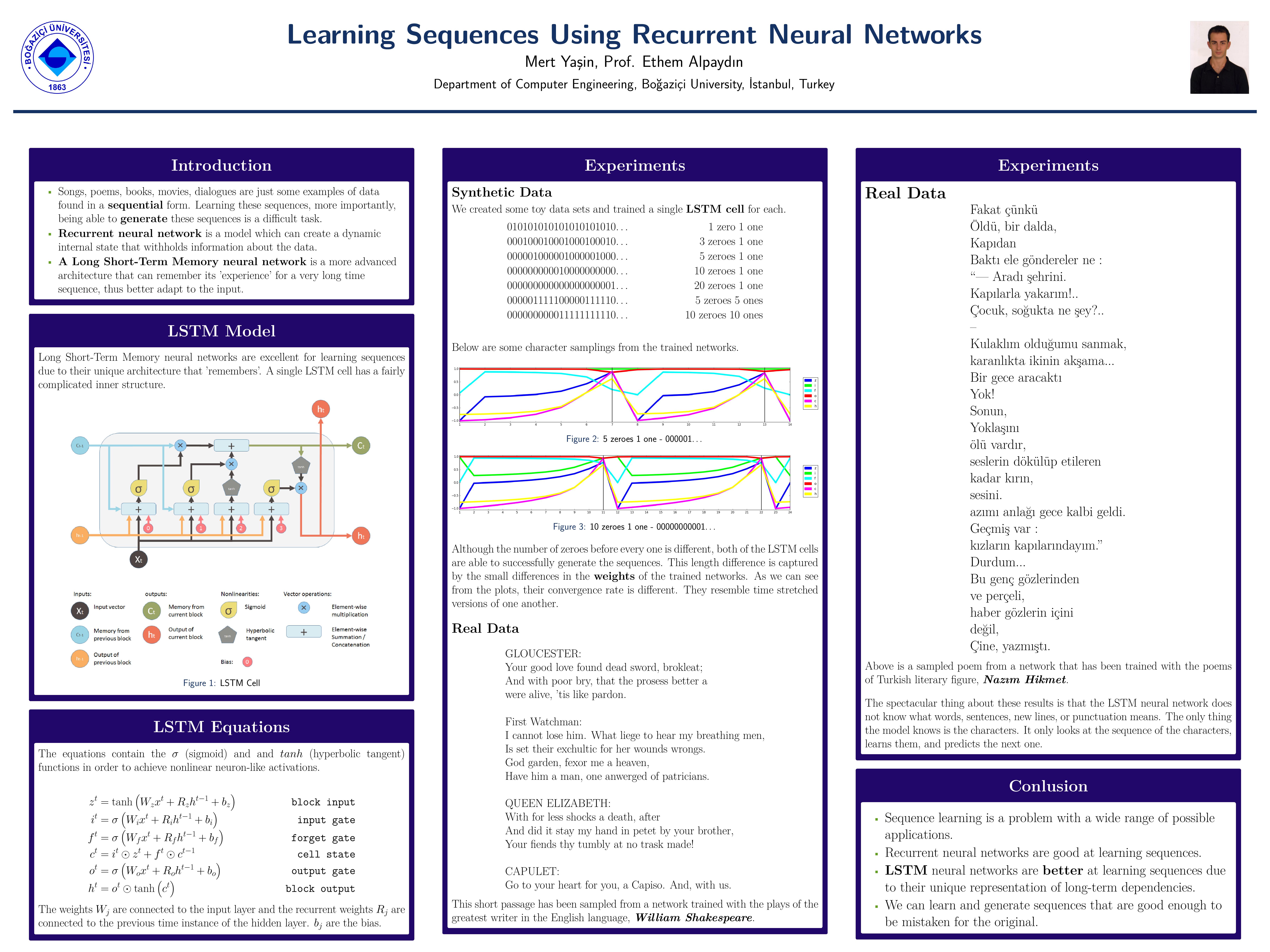

Songs, poems, books, movies, and dialogues are just some examples of data found in sequential form. Learning these sequences and, more importantly, being able to generate them is a difficult task. A recurrent neural network (RNN) is a model that maintains a dynamic internal state, which retains information about the data it has seen so far. A Long Short-Term Memory (LSTM) neural network is a more advanced architecture that can retain its 'experience' over very long sequences and thus adapt better to the input. This research analyzes the structure of the LSTM neural network in depth. We trained several networks to generate Nazım Hikmet poems and William Shakespeare plays. We also constructed some toy datasets in order to better understand how information is stored within the LSTM cell.
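To make the idea of an internal state concrete, here is a minimal sketch of a single LSTM step in plain Python. It assumes the commonly used LSTM formulation with forget, input, and output gates and no peephole connections, which may differ from the exact variant trained in this work. The names `init_lstm`, `lstm_step`, and `run_sequence`, the weight range, and the tiny vocabulary are all hypothetical choices made for illustration, and the weights are random rather than trained.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(W, v):
    # Multiply a matrix (list of rows) by a vector.
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def init_lstm(input_size, hidden_size, seed=0):
    # Hypothetical initializer: small random weights, zero biases.
    rng = random.Random(seed)

    def mat():
        return [[rng.uniform(-0.1, 0.1) for _ in range(input_size + hidden_size)]
                for _ in range(hidden_size)]

    # One (weights, bias) pair per gate: forget, input, output, candidate.
    return {name: (mat(), [0.0] * hidden_size) for name in ("f", "i", "o", "g")}


def lstm_step(params, x, h_prev, c_prev):
    # Every gate sees the current input concatenated with the previous hidden state.
    z = list(x) + list(h_prev)

    def gate(name, activation):
        W, b = params[name]
        return [activation(a + bias) for a, bias in zip(matvec(W, z), b)]

    f = gate("f", sigmoid)    # how much of the old cell state to keep
    i = gate("i", sigmoid)    # how much of the candidate to write
    o = gate("o", sigmoid)    # how much of the cell state to expose
    g = gate("g", math.tanh)  # candidate values for the cell state

    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c


def run_sequence(params, text, vocab, hidden_size):
    # Feed characters one at a time as one-hot vectors, carrying (h, c) forward.
    h = [0.0] * hidden_size
    c = [0.0] * hidden_size
    for ch in text:
        x = [1.0 if symbol == ch else 0.0 for symbol in vocab]
        h, c = lstm_step(params, x, h, c)
    return h, c


vocab = ["a", "b", "c"]
params = init_lstm(input_size=len(vocab), hidden_size=4)
h, c = run_sequence(params, "abcab", vocab, hidden_size=4)
```

The pair `(h, c)` returned after the last character is the internal state: `c` is the cell's memory and `h` is the part of it exposed to the rest of the network. Because each step depends on the previous state, the result depends on the order of the characters as well as on which characters appear.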